Summary: Vision Language Action (VLA) models are an emerging development in artificial intelligence and robotics. They integrate visual perception, language understanding, and action execution into a single framework. This departs from traditional AI models, which each specialize in one of these skills. A VLA is designed to perceive its environment, interpret instructions given in natural language, and carry out the corresponding tasks, which could change what we expect from AI assistants. The technology has potential applications in homes, factories, healthcare, agriculture, and even virtual spaces. Imagine a future where VLA-powered robots acquire complex skills by observing human actions or receiving feedback in plain language. Such capabilities could reshape how we live and work, opening new opportunities for collaboration and efficiency. As this technology matures, it is also crucial to address the ethical responsibilities that come with building such powerful, adaptive agents.

Welcome back to another exciting edition of the Deep Dive, where we explore the forefront of technological innovation. Today, we’re looking at a significant development in artificial intelligence and robotics: Vision Language Action (VLA) models. These systems aim to change how we interact with AI by integrating visual perception, language understanding, and action execution into a unified framework. Join us as we examine how VLAs work, where they are being applied, and which challenges still stand between them and their full potential.

Understanding Vision Language Action Models

At the core of VLA models is the integration of three previously distinct capabilities: vision, language, and action. Traditional AI systems specialize in just one of these skills, but VLAs combine all three in a single model. Imagine an AI system that can perceive its environment, understand instructions in natural language, and perform tasks accordingly. This is the essence of VLAs, and it marks a significant leap forward in AI development.

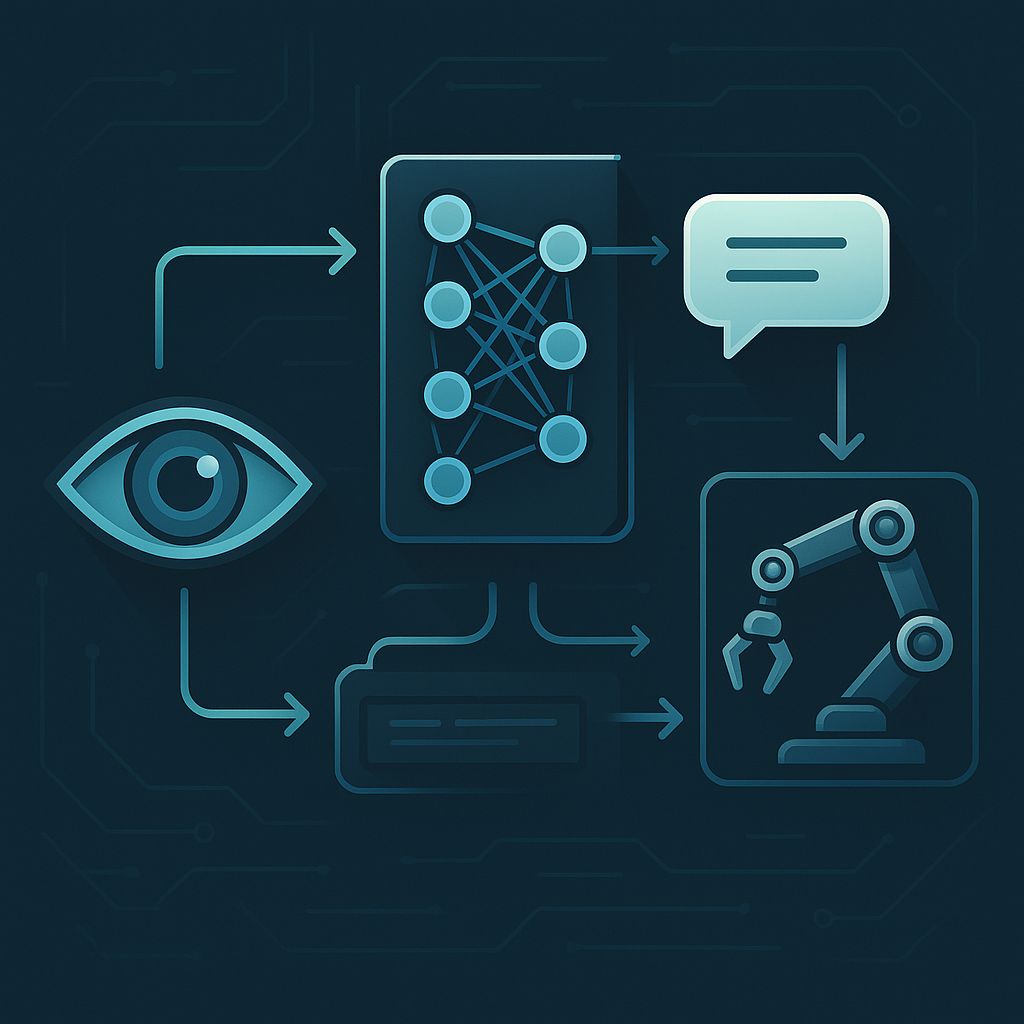

To achieve this integration, VLAs rely on tokenization. The camera images, the language command, and the robot’s physical state are each converted into a sequence of tokens. Text is split into discrete token IDs, and images are typically cut into patches that become vision tokens. Continuous quantities such as joint angles can be discretized into bins. Every input ends up as tokens in a shared representation space, so a single model can reason jointly about what it sees, what it is told, and what it needs to do.
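The following is a minimal Python sketch of the three tokenization paths. It rests on several assumptions. The vocabulary is a toy, word-level one, whereas real VLAs use learned subword tokenizers. The image is a grayscale grid cut into square patches. Joint angles are discretized into 256 equal-width bins, a bin count chosen here for illustration. The names `WORD_VOCAB`, `tokenize_text`, `patchify`, `discretize`, and `tokenize_state` are hypothetical and do not come from any particular model. In a real system, each patch vector would also pass through a learned projection before entering the model.

```python
from typing import Dict, List

# Hypothetical toy vocabulary; production VLAs use a learned subword tokenizer.
WORD_VOCAB: Dict[str, int] = {"<unk>": 0, "pick": 1, "up": 2, "the": 3, "red": 4, "cup": 5}


def tokenize_text(instruction: str) -> List[int]:
    """Language tokens: map each lowercase word to an integer ID (unknown words -> 0)."""
    return [WORD_VOCAB.get(word, WORD_VOCAB["<unk>"]) for word in instruction.lower().split()]


def patchify(image: List[List[float]], patch: int) -> List[List[float]]:
    """Vision tokens: split an H x W grayscale image into non-overlapping
    patch x patch tiles and flatten each tile into one vector.
    Rows/columns that do not fill a whole tile are dropped."""
    height, width = len(image), len(image[0])
    tokens: List[List[float]] = []
    for r in range(0, height - height % patch, patch):
        for c in range(0, width - width % patch, patch):
            tokens.append([image[r + i][c + j] for i in range(patch) for j in range(patch)])
    return tokens


def discretize(value: float, low: float, high: float, bins: int) -> int:
    """Map a continuous value into one of `bins` equal-width integer bins."""
    clipped = min(max(value, low), high)
    index = int((clipped - low) / (high - low) * bins)
    return min(index, bins - 1)  # value == high would otherwise land in bin `bins`


def tokenize_state(joint_angles: List[float], low: float = -3.14,
                   high: float = 3.14, bins: int = 256) -> List[int]:
    """State tokens: discretize each joint angle (radians) independently."""
    return [discretize(q, low, high, bins) for q in joint_angles]


if __name__ == "__main__":
    image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]  # 4x4 toy "camera frame"
    print(patchify(image, 2))                    # 4 vision tokens of 4 pixels each
    print(tokenize_text("Pick up the red cup"))  # [1, 2, 3, 4, 5]
    print(tokenize_state([0.0, 3.14, -3.14]))    # [128, 255, 0]
```

The key point is that all three inputs come out as sequences of numbers, so they can be placed side by side in one model input.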

- Input Acquisition: Visual data from cameras, natural language instructions, and the robot’s real-time state.

- Tokenization: Converts inputs into vision tokens, language tokens, and state tokens.

- Multimodal Fusion: Uses techniques such as cross-attention to combine these tokens into a single joint representation.

- Action Generation: An autoregressive decoder generates action tokens one at a time, each conditioned on the fused context and on the tokens before it; these are then decoded into motor commands.
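The fusion and action-generation steps can be sketched in a few dozen lines of Python. This is a toy and does not reproduce any specific VLA. It makes the following assumptions:

- embeddings are two-dimensional;
- a single language-token query attends over vision tokens with scaled dot-product cross-attention;
- the action vocabulary has 8 bins;
- a hypothetical `toy_step` function stands in for a trained transformer decoder.

In the last step, each action token is mapped back to a continuous motor command by taking the centre of its bin, which inverts the binning of a continuous value.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
BINS = 8  # hypothetical: real models may use many more bins per action dimension


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(query: Vector, keys: Sequence[Vector], values: Sequence[Vector]) -> Vector:
    """Scaled dot-product attention: one query token attends over context
    tokens and returns the attention-weighted average of their values."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]


StepFn = Callable[[Vector, List[int]], List[float]]


def greedy_decode(step_fn: StepFn, context: Vector, num_tokens: int) -> List[int]:
    """Autoregressive loop: each action token is conditioned on the fused
    context and on every token emitted before it (greedy argmax selection)."""
    tokens: List[int] = []
    for _ in range(num_tokens):
        logits = step_fn(context, tokens)
        tokens.append(max(range(len(logits)), key=logits.__getitem__))
    return tokens


def detokenize(bin_index: int, low: float, high: float, bins: int = BINS) -> float:
    """Map a bin index back to the centre of its continuous interval."""
    width = (high - low) / bins
    return low + (bin_index + 0.5) * width


def toy_step(context: Vector, prefix: List[int]) -> List[float]:
    """Hypothetical stand-in for a trained decoder: it favours the bin one past
    the previous token, starting from a bin chosen by the fused context."""
    start = BINS // 2 if context[0] > 0 else 0
    target = (start + len(prefix)) % BINS
    return [1.0 if b == target else 0.0 for b in range(BINS)]


if __name__ == "__main__":
    vision_tokens = [[1.0, 0.0], [0.0, 1.0]]  # two toy vision-token embeddings
    language_query = [2.0, 0.0]               # a toy language-token embedding
    fused = cross_attention(language_query, vision_tokens, vision_tokens)
    action_tokens = greedy_decode(toy_step, fused, num_tokens=3)
    commands = [detokenize(t, -1.0, 1.0) for t in action_tokens]
    print(action_tokens)  # [4, 5, 6]
    print(commands)       # [0.125, 0.375, 0.625]
```

Greedy argmax keeps the example deterministic. Sampling from the softmax of the logits would be an equally valid decoding choice.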

Real-World Applications of VLA Models

VLAs are not just theoretical; they are making tangible impacts across various sectors. From humanoid robots to autonomous vehicles, the applications are vast and varied.

- Humanoid Robots: Companies like Figure AI leverage VLAs in their robots, enabling them to understand and execute complex tasks in unstructured environments.

- Autonomous Vehicles: VLAs can combine camera data with language-level reasoning about a scene, with the aim of improving navigation and safety.

- Industrial Automation: In factories, VLAs offer flexibility by allowing robots to understand verbal instructions and adapt to changes without reprogramming.

- Healthcare: Surgical robots built on VLAs could interpret voice commands alongside visual feeds, with the potential to improve precision and patient outcomes.

- Agriculture: Robots use VLAs for tasks like selective harvesting, adapting to natural variations in crops.

- Augmented Reality: VLA systems in AR can provide context-aware navigation, enhancing user experiences.

“VLAs represent a significant step forward, moving robots from specialized tools to potential general-purpose helpers or collaborators.” – AI Robotics Expert

Challenges and the Path Forward

Despite their potential, VLAs face several challenges. Real-time inference, system integration, safety, and data biases are significant hurdles. Ensuring these systems can operate safely and efficiently in real-world environments is paramount. Researchers are actively working on solutions such as faster inference techniques, diverse data collection, and building safety mechanisms into VLA architectures.

- Speed: Ensuring VLAs can make decisions quickly enough for real-time applications.

- Integration: Seamlessly combining multiple AI components into a cohesive system.

- Safety: Guaranteeing reliable and safe operation in dynamic environments.

- Data Biases: Addressing potential biases in training data that could affect model performance.
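A back-of-the-envelope latency budget makes the Speed challenge concrete. When action tokens are generated autoregressively, one at a time, each action dimension adds a sequential decoder step. The sketch below uses three assumptions:

- a 7-dimensional action, for example six arm degrees of freedom plus a gripper command;
- 20 ms per decoder step;
- 60 ms to encode the image and instruction.

These figures are illustrative, not measurements of any real model. `control_rate_hz` and `meets_target` are hypothetical helper names.

```python
def control_rate_hz(decode_steps: int, ms_per_step: float, encode_ms: float) -> float:
    """Actions per second when every action requires one encoding pass
    (image + instruction) followed by `decode_steps` sequential decoder steps."""
    latency_ms = encode_ms + decode_steps * ms_per_step
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


def meets_target(rate_hz: float, target_hz: float) -> bool:
    """True if the achievable action rate reaches the required control rate."""
    return rate_hz >= target_hz


if __name__ == "__main__":
    # All numbers below are hypothetical, chosen only to illustrate the arithmetic.
    sequential = control_rate_hz(decode_steps=7, ms_per_step=20.0, encode_ms=60.0)
    single_step = control_rate_hz(decode_steps=1, ms_per_step=20.0, encode_ms=60.0)
    print(sequential, meets_target(sequential, 10.0))    # 5.0 False
    print(single_step, meets_target(single_step, 10.0))  # 12.5 True
```

Under these assumed numbers, sequential decoding yields 1000 / (60 + 7 × 20) = 5 actions per second. A hypothetical model that emitted all seven values in a single decoder step would reach 12.5. This gap is one reason faster inference techniques are an active research direction.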

The future vision for VLAs includes developing massive multimodal foundation models capable of general intelligence, and agentic, self-supervised learning systems that adapt to new tasks by interacting with the world. Safety and ethical considerations remain central to these efforts, ensuring that as these systems become more capable, they align with human values and societal needs.

“Imagine a future where VLA-powered robots can acquire complex skills by observing human actions or receiving feedback in plain language. This promises to redefine how we live and work.” – AI Visionary

In conclusion, Vision Language Action models are paving the way for a new era of AI that bridges perception, language, and action. As we stand on the brink of this technological frontier, the opportunities are vast, but so are the responsibilities. It is crucial that we approach this development with thoughtful consideration of the ethical implications to ensure a future where AI serves as a beneficial and integral part of society.

Summary

In this deep dive, we explored Vision Language Action models, a transformative technology in AI and robotics. VLAs integrate vision, language, and action capabilities, enabling robots to perceive, understand, and act in their environments. Their applications span various sectors, offering promising advancements in robotics and AI. However, challenges such as speed, integration, safety, and biases must be addressed to fully realize their potential. As researchers work towards these goals, the future of VLA models holds immense promise for revolutionizing how we interact with intelligent machines.
